
General

- Developed at UCLA.

- A set of Java libraries.

- It is not finished, but it is not dead code either (i.e., it has not been abandoned).

- There is extensive documentation of the algorithms and their implementation.

- Since it is a collection of algorithms, we need to decide which ones we actually need!

- "The focus of this framework is to ease the development of new algorithms and the comparison against existing models." (Jurgens, Stevens).

- "Each word space algorithm is designed to run as a stand alone program and also to be used as a library class." (Jurgens, Stevens).

- The library supports word-document vectors.

- The authors state that it can collect more than one context vector for a single word, depending on its meaning (e.g. "bank" as a financial institution and "bank" as a place to sit).

- "Libraries provide support for converting between multiple matrix formats, enabling interaction with external matrix-based programs".

- SVD and randomized projections.

- Judging from the figures, the running time of most of the algorithms seems to grow linearly with corpus size!

- The package consists of four types of tools:

- A library (implementation) of algorithms commonly used in semantic spaces.

- Tools for building semantic models.

- Evaluation tools (e.g. TOEFL test for synonyms).

- Interaction tools (e.g. queries, etc.).
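SVD and randomized projections reduce dimensionality in very different ways: SVD factorizes the whole word-by-document matrix at once, while random indexing (a randomized-projection method, compared with LSA further down in these notes) builds fixed-size vectors incrementally. A minimal sketch of the general random indexing idea, assuming whitespace tokenization and a symmetric context window; the function names and the parameters dim, nonzeros and window are my own and are not the S-Space API.

```python
import random
from collections import defaultdict


def make_index_vector(dim, nonzeros, rng):
    """Sparse ternary index vector stored as (position, +1/-1) pairs."""
    positions = rng.sample(range(dim), nonzeros)
    return [(p, rng.choice((1, -1))) for p in positions]


def random_indexing(documents, dim=100, nonzeros=4, window=2, seed=0):
    """Return {word: context vector}; each context vector is the sum of the
    index vectors of the words seen within `window` positions of it."""
    rng = random.Random(seed)
    index_vectors = {}
    context = defaultdict(lambda: [0] * dim)
    for doc in documents:
        tokens = doc.lower().split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j == i:
                    continue
                neighbor = tokens[j]
                # Each distinct word gets one random index vector, created lazily.
                if neighbor not in index_vectors:
                    index_vectors[neighbor] = make_index_vector(dim, nonzeros, rng)
                vec = context[word]
                for pos, sign in index_vectors[neighbor]:
                    vec[pos] += sign
    return dict(context)
```

The vector length stays at dim no matter how many documents are read, which is why this family of methods avoids the memory blow-up of a full SVD.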

Installation

- Required software:

- svn (Subversion), which can be installed with apt-get:

sudo apt-get install subversion

- To install the package, go to a target directory. The authors recommend the following command:

svn checkout http://airhead-research.googlecode.com/svn/trunk/sspace sspace-read-only

- A new directory should have been created. Go to that directory and run the command

ant

Ant is an Apache project used to build Java software. It automatically detects the file build.xml and builds from it. I explained here how to install Ant.

- If you want to use the .jar files directly, you may also want to use the command

Testing

- The S-Space package supports reading and writing several matrix file formats. Among those supported are:

- SVDLIBC text, sparse text, binary and sparse binary

- Matlab and Octave dense text and sparse text formats

- CLUTO sparse text

- The package provides a user interface, i.e., a class for using the S-Space package from the terminal.

- The package provides utilities to process 'raw text', which means that these utilities presuppose a pre-processed corpus! The user can select how the text files are structured, e.g., as a single data file (one document per line) or as separate files. Check here for a short tutorial.
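To make the matrix formats listed above concrete, here is a minimal sketch of writers for two of them. It assumes the layouts as I understand them: SVDLIBC sparse text is a header "rows cols nonzeros" followed, column by column, by the number of non-zeros in that column and then one "row value" line per non-zero (0-based row indices); Matlab sparse text is one "row col value" triple per non-zero with 1-based indices, as read by Matlab's spconvert. These are hypothetical helpers, not S-Space's MatrixIO.

```python
def to_svdlibc_sparse_text(matrix):
    """Serialize a dense list-of-rows matrix as SVDLIBC sparse text (column-major, 0-based rows)."""
    rows, cols = len(matrix), len(matrix[0])
    columns = [
        [(r, matrix[r][c]) for r in range(rows) if matrix[r][c] != 0]
        for c in range(cols)
    ]
    nnz = sum(len(col) for col in columns)
    lines = [f"{rows} {cols} {nnz}"]
    for col in columns:
        lines.append(str(len(col)))              # non-zeros in this column
        lines.extend(f"{r} {v}" for r, v in col)  # row index and value
    return "\n".join(lines) + "\n"


def to_matlab_sparse_text(matrix):
    """One 'row col value' triple per non-zero entry, 1-based (spconvert style)."""
    lines = []
    for r, row in enumerate(matrix):
        for c, v in enumerate(row):
            if v != 0:
                lines.append(f"{r + 1} {c + 1} {v}")
    return "\n".join(lines) + "\n"
```

For m = [[1.0, 0.0], [0.0, 2.5], [3.0, 0.0]], the SVDLIBC writer produces the lines "3 2 3", "2", "0 1.0", "2 3.0", "1", "1 2.5", while the Matlab writer produces "1 1 1.0", "2 2 2.5", "3 1 3.0".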

— Eduardo Aponte 2010/11/16 10:38

First Trial

- The trials I am performing now only use the prebuilt .jar files, running the programs from the command line, i.e., without hacking on any class.

- I began with a very simple trial on the whole corpus using LSA without threads. As expected, after 20 minutes the process terminated with a memory error.
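LSA fails on the whole corpus because the full word-by-document matrix has to be built before any reduction takes place. A minimal sketch of building that matrix sparsely from only the first N documents, assuming one document per line in a gzipped, Latin-1 encoded file (the corpus file name suggests Latin-1). The function names are hypothetical and do not correspond to S-Space classes.

```python
import gzip
from collections import Counter
from itertools import islice


def read_documents(path, limit, encoding="latin-1"):
    """Yield at most `limit` documents from a gzipped file with one document per line."""
    with gzip.open(path, "rt", encoding=encoding) as f:
        for line in islice(f, limit):
            yield line.strip()


def term_document_counts(documents):
    """Return (vocab, cells): vocab maps term -> row index, and cells maps
    (term_index, doc_index) -> count. Only non-zero cells are stored."""
    vocab = {}
    cells = {}
    for d, doc in enumerate(documents):
        for term, count in Counter(doc.lower().split()).items():
            t = vocab.setdefault(term, len(vocab))
            cells[(t, d)] = count
    return vocab, cells
```

Usage would be along the lines of term_document_counts(read_documents("corpus.txt.gz", 200)), where the path is a placeholder. Storing only the non-zero cells keeps memory proportional to the number of distinct (term, document) pairs rather than to vocabulary size times number of documents.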

Second Trial

- I ran the following command on the corpus:

java -jar /net/data/CL/projects/wordspace/software_tests/sPackage/sspace-read-only/bin/lsa.jar -dwp500_articles_hw.latin1.txt.gz -X200 -t10 -v -n100 results/firstTry.sspace

This command should read 200 documents from the corpus (the first 200 lines), use 10 threads (I have no idea how the work is divided among them), and run SVD (the default setting) with 100 dimensions. I didn't monitor memory, but I enabled verbose terminal output.

- Encouragingly, I got the following:

FINE: Processed all 200 documents in 0.271 total seconds

- However, as expected, the process got stuck during SVD:

Nov 21, 2010 12:44:10 PM edu.ucla.sspace.lsa.LatentSemanticAnalysis processSpace
INFO: reducing to 100 dimensions
Nov 21, 2010 12:44:10 PM edu.ucla.sspace.matrix.MatrixIO matlabToSvdlibcSparseBinary
INFO: Converting from Matlab double values to SVDLIBC float values; possible loss of precision
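The second log message warns that LSA converts its Matlab-format double values into SVDLIBC's single-precision floats. A small sketch using Python's struct module shows what that rounding does in general: single precision keeps only about 7 significant decimal digits, so values that differ beyond that become identical. This illustrates float32 rounding only; it is not the S-Space conversion code, and the function name is hypothetical.

```python
import struct


def to_float32(x):
    """Round a Python float (an IEEE double) to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def relative_error(x):
    """Relative error introduced by the double -> float conversion of a non-zero x."""
    return abs(to_float32(x) - x) / abs(x)
```

For example, to_float32(0.1) returns 0.10000000149011612, and to_float32(1.0 + 1e-9) returns exactly 1.0, so two entries that differed in the ninth decimal place are no longer distinguishable.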

Trials with LSA

I performed a number of trials with LSA. These trials were intended to measure the time and memory consumed by the different SVD algorithms compatible with the LSA implementation. The following are available from the command line:

- SVDLIBC

- Matlab

- GNU Octave

- JAMA

- COLT
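All of these back ends compute the same thing for LSA: a truncated SVD that keeps only the largest singular values (the 100 or 200 dimensions used in these trials). A toy sketch of that idea in pure Python, using power iteration on A^T A with deflation. This is not how SVDLIBC, Matlab or the other back ends work internally (they use far more efficient solvers), and the function names are hypothetical.

```python
import math
import random


def matvec(m, v):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def top_singular_values(a, k, iters=300, seed=0, tol=1e-12):
    """Approximate the k largest singular values of `a` (a list of rows).

    Power iteration on A^T A; after every multiplication the components along
    the right singular vectors found so far are projected out (deflation).
    """
    rng = random.Random(seed)
    at = [list(col) for col in zip(*a)]  # transpose of a
    n = len(at)
    basis = []   # unit-length right singular vectors found so far
    sigmas = []
    for _ in range(k):
        v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        exhausted = False
        for _ in range(iters):
            w = matvec(at, matvec(a, v))  # w = A^T A v
            for u in basis:
                proj = sum(x * y for x, y in zip(w, u))
                w = [x - proj * y for x, y in zip(w, u)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < tol:
                exhausted = True  # remaining subspace carries no energy: rank reached
                break
            v = [x / norm for x in w]
        if exhausted:
            sigmas.append(0.0)
            continue
        basis.append(v)
        sigmas.append(math.sqrt(sum(x * x for x in matvec(a, v))))  # sigma = |A v|
    return sigmas
```

Keeping k small relative to the number of documents is exactly the trade-off discussed next: fewer dimensions mean a cheaper decomposition but a more compressed space.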

I didn't do a thorough review of the implementations; instead, I started testing every algorithm. Previous results showed that the default algorithm (SVDLIBC) produced strange results: the angular distance between close neighbors was extremely low. Two possible reasons were identified. First, the number of dimensions might be too low relative to the number of documents; in that case, SVD would collapse the distances, creating an extremely dense vector space. Second, there might be a bug in the implementation. My guess is that, since the implementation requires a pipeline between LSA's internal matrix format and SVDLIBC, a loss of precision caused the problem. If that were the case, selecting Matlab for the SVD should solve it (because the Matlab format and LSA's internal format are identical).
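The notes do not record exactly how the angular distance was computed. A common definition, assumed here, is the angle between two vectors derived from their cosine similarity and normalized to [0, 1]. A minimal sketch with hypothetical function names:

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between vectors u and v."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / norm


def angular_distance(u, v):
    """Angle between u and v scaled to [0, 1]: 0 = same direction, 1 = opposite."""
    cos = max(-1.0, min(1.0, cosine_similarity(u, v)))  # clamp rounding noise
    return math.acos(cos) / math.pi
```

Orthogonal vectors have distance 0.5 and opposite vectors have distance 1.0. In an overly dense space, even unrelated neighbors end up with distances close to 0, which is the symptom described above.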

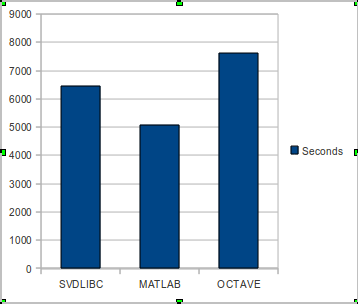

I performed a test with 30000 documents and 200 dimensions using SVDLIBC, MATLAB, OCTAVE and COLT. The results were partly disappointing: with the exception of MATLAB, all the algorithms ran out of memory (specifically, the pipeline between LSA and the SVD algorithm ran out of heap memory).

- SVDLIBC: Ran out of memory at 6459 seconds.

- Matlab: returned a 450 MB .sspace file after 5083 seconds.

- GNU Octave: Ran out of memory at 7624 seconds
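The out-of-heap errors are easier to understand with a back-of-the-envelope estimate: a dense matrix needs rows x cols x bytes per value, whereas a sparse matrix only pays per non-zero entry. A minimal sketch; the function names are hypothetical, and the vocabulary size used below is a made-up figure, since these notes do not record the corpus vocabulary.

```python
def dense_matrix_bytes(rows, cols, bytes_per_value=8):
    """Memory for a dense rows x cols matrix (8 bytes per double, 4 per float)."""
    return rows * cols * bytes_per_value


def sparse_matrix_bytes(nonzeros, bytes_per_value=8, bytes_per_index=4):
    """Rough lower bound for a coordinate-style sparse matrix:
    one value plus a row index and a column index per non-zero entry."""
    return nonzeros * (bytes_per_value + 2 * bytes_per_index)
```

With 30000 documents and a hypothetical vocabulary of 100,000 terms, dense_matrix_bytes(100_000, 30_000) is 24,000,000,000 bytes (24 GB) of doubles, while the reduced 200-dimensional word space, dense_matrix_bytes(100_000, 200), needs only 160,000,000 bytes. The intermediate full matrix, not the final space, is what exhausts memory.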

Visual inspection suggests that the problems with the density of the vector space are solved by using MATLAB as the default algorithm.

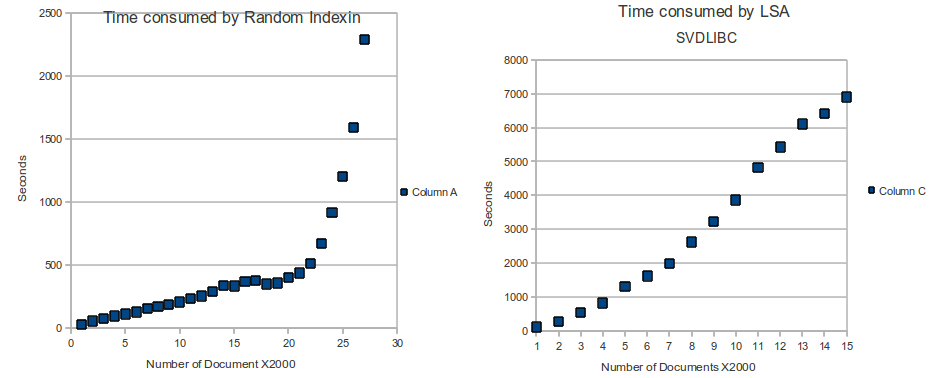

Finally, I compared the scalability of Random Indexing and LSA (using SVDLIBC with 100 dimensions):

Clearly, LSA can hardly handle large corpora. Although the results for Random Indexing are different, they suggest a similar conclusion.

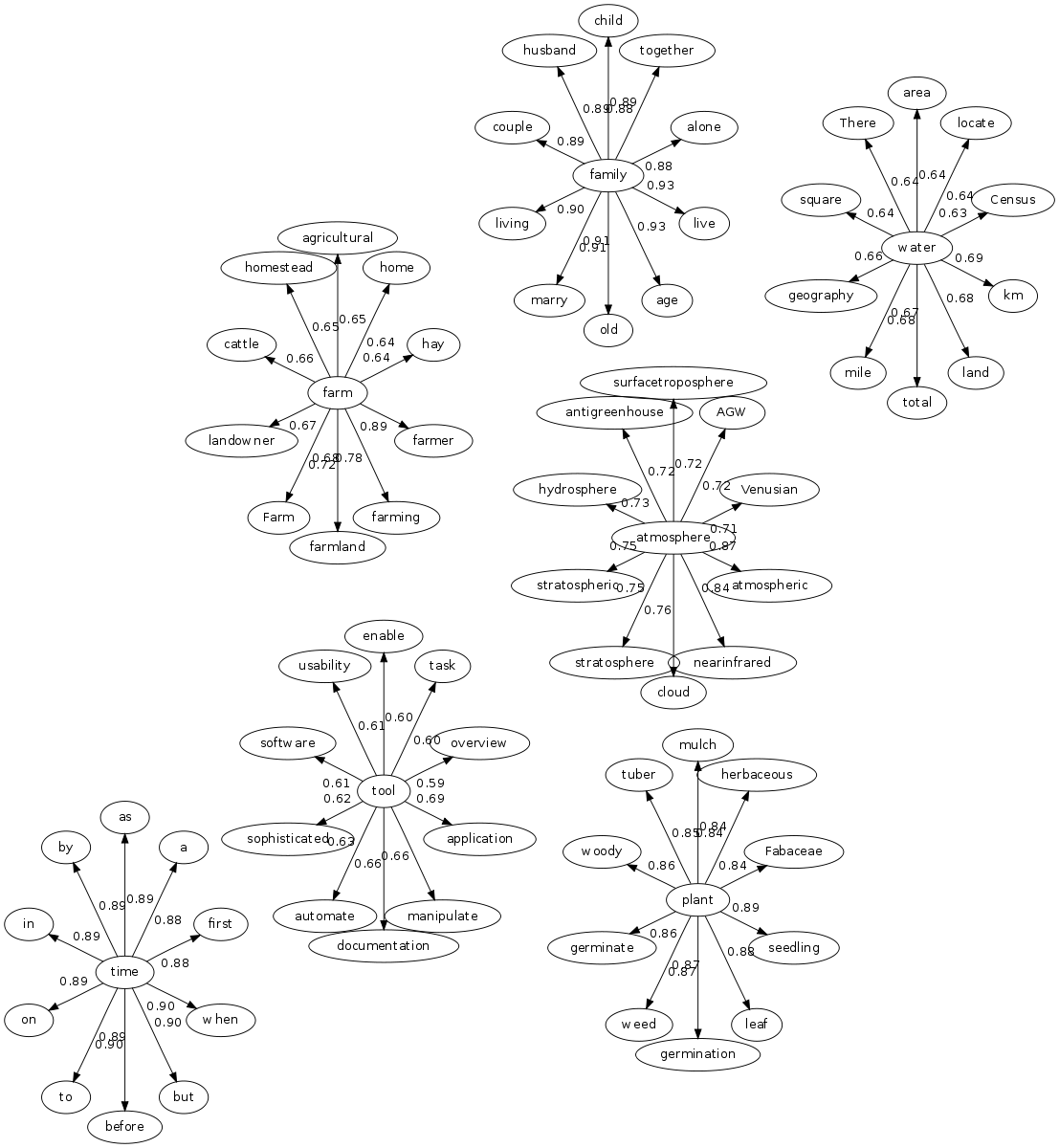

I wrote a simple script that automatically documents the results of every experiment. It can be found under the name "myScript.sh" in the corpora directory. The results are stored in the directory statistics. A Python script automatically generates a Graphviz representation in the directory vizImages. Since it is intended to be used with twopi, the generated files use that name as their extension.
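The Python script itself is not included in these notes. The following is a hypothetical sketch of how a nearest-neighbor graph could be written in the DOT language so that twopi (Graphviz's radial layout) places the query word at the center via the root attribute. The input format, a list of (neighbor, similarity) pairs, and the function name are assumptions.

```python
def neighbors_to_dot(word, neighbors):
    """Star graph in DOT: `word` as the twopi root, one edge per
    (neighbor, similarity) pair, labelled with the similarity."""

    def quote(s):
        # Escape backslashes and double quotes for a DOT quoted ID.
        return '"' + s.replace('\\', '\\\\').replace('"', '\\"') + '"'

    lines = ["graph neighbors {", f"  root={quote(word)};"]
    for other, sim in neighbors:
        lines.append(f'  {quote(word)} -- {quote(other)} [label="{sim:.3f}"];')
    lines.append("}")
    return "\n".join(lines) + "\n"
```

After writing the string to a file such as bank.twopi, it can be rendered with, for example, twopi -Tpng bank.twopi -o bank.png.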